ZFS on single-core RISC-V hardware with 512MB of RAM (Sipeed Lichee RV D1)

Is this feasible?

Yes, it is! OpenZFS on Linux, compiled from source for RISC-V, works with less than 250MB of RAM on a nearly full 2TB ZFS volume holding 225,000 files!

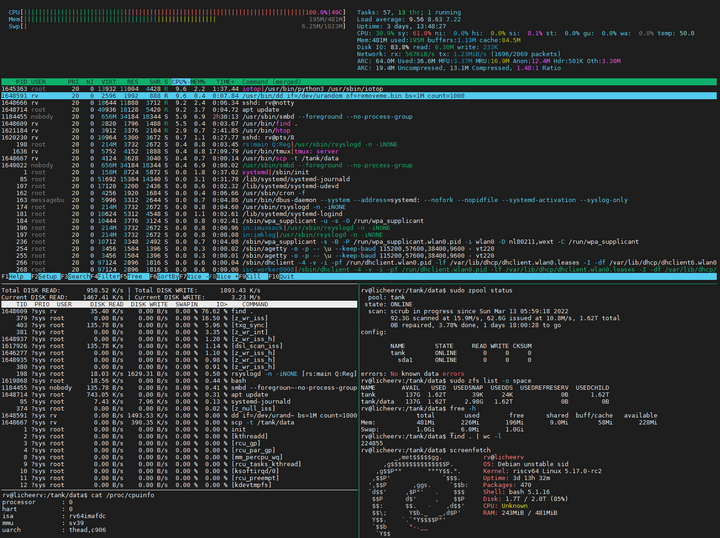

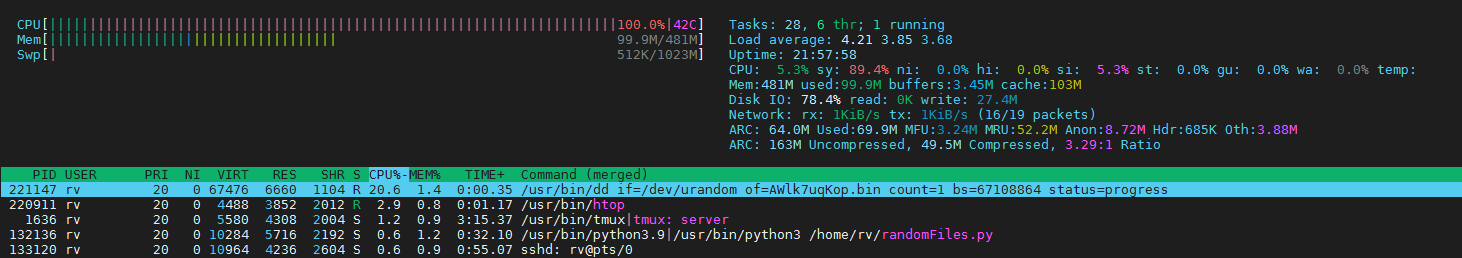

Even when stressing the system by running all of the following in parallel (over Wi-Fi): an scp copy (650kB/s), a Samba transfer (1.3MB/s), writing random data to disk with dd (2.8MB/s), finding files, running apt update, zfs-auto-snapshot and a zpool scrub, the board needs well below 450MB of RAM.

My conclusion

Linux and OpenZFS are an engineering marvel! The OpenZFS community has successfully ported it to RISC-V, which is remarkable given how little RISC-V hardware is available yet. Well done, Linux and OpenZFS communities!

...and the recommendation of 1GB of RAM per TB of storage?

I think I have shown that 256MB of RAM per TB of storage is feasible (and one can probably manage even more storage with the same amount of memory), hence you don't need 1GB of RAM per TB, although you might want more for performance reasons.

[Update-2022-03-13] As I learned from Durval Menezes on the zfsonlinux forum, the "1GB RAM per 1TB Disk" rule "used to be the case until a few years ago (around ZFS 0.7.x-0.8.x IIRC), but then our cherished devs did a round of specific memory optimizations and since then it's no longer the case." [/Update]

The details

Below are some more details on how I configured the system, including the tricks I had to apply to get ZFS running on limited memory without kernel panics, the performance I measured, and how I built the ZFS kernel module and tools on the Sipeed Lichee RV RISC-V board.

Performance

To estimate the maximum read/write speeds (in MB/s), I used 1GB sequential reads and writes on the Sipeed Lichee RV carrier board with the RISC-V D1 processor. I chose 1GB so that caching cannot have a big impact on the measurements (the board has only 512MB of RAM).
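The post does not list the exact benchmark commands. A minimal sketch of such a test could look like the following, assuming GNU dd (the tool already used above to write random data). The helper names seq_write and seq_read and the path /tank/bench.bin are hypothetical.

```shell
#!/bin/sh
# Sketch of a sequential write/read test with dd (assumed method, not
# necessarily the exact commands behind the table below). Sizes are in MiB.

# seq_write SOURCE OUTFILE SIZE_MIB
#   SOURCE is /dev/zero (zeros) or /dev/urandom (random data).
#   conv=fdatasync makes dd flush the data to disk before it reports a speed,
#   so the page cache does not inflate the write number.
seq_write() {
    dd if="$1" of="$2" bs=1M count="$3" conv=fdatasync 2>&1 | tail -n 1
}

# seq_read INFILE
#   Flushes dirty data and tries to drop the page cache first (only works as
#   root, silently skipped otherwise), so the data has to come from the disk.
seq_read() {
    sync
    { echo 3 > /proc/sys/vm/drop_caches; } 2>/dev/null || true
    dd if="$1" of=/dev/null bs=1M 2>&1 | tail -n 1
}

# Usage for a 1GB run on a hypothetical pool mounted at /tank:
#   seq_write /dev/zero /tank/bench.bin 1024
#   seq_read /tank/bench.bin
```

The last line dd prints contains the throughput. Keeping conv=fdatasync on the write side matters on a 512MB board: without it, dd may partly report how fast it filled the page cache rather than how fast the disk is.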

| Device | Filesystem | Write zeros (MB/s) | Write random data (MB/s) | Read zeros (MB/s) | Read random data (MB/s) |
|---|---|---|---|---|---|
| 256GB SSD via USB2 | ext4 | 35.2 | 12.4 | 41.3 | 40.9 |
| 256GB SSD via USB2 | zfs | 38.2 | 15.1 | 42.0 | 41.7 |
| 256GB SSD via USB2 | zfs native encryption | 4.0 | 3.0 | 3.7 | 3.7 |
| 256GB SSD via USB2 | LUKS + zfs | 8.0 | 5.7 | 7.6 | 7.6 |
| 2TB HDD via USB2 | zfs | 22.5 | 13.3 | 27.1 | 26.1 |
| Internal SD card (MMC) | ext4 | 12.8 | 10.4 | 12.1 | 12.1 |

It is impressive that zfs is faster than ext4 on the SSD in all four tests. However, ZFS native encryption reaches only about half the throughput of the LUKS + zfs combination. This is not specific to the RISC-V port; in my experience, the same holds on Intel architecture.

The tricks

ZFS ARC size

By default, the ZFS kernel module allocates half of the system RAM for caching/buffers (the ARC). This would amount to 256MB on the Lichee RV board. One can query the minimum and maximum ARC sizes directly from the kernel module:

cat /sys/module/zfs/parameters/zfs_arc_min

cat /sys/module/zfs/parameters/zfs_arc_max

or get a summary with the arc_summary tool

arc_summary -s arc
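The same numbers can also be read from the ARC kstats. The sketch below assumes the OpenZFS kstat file /proc/spl/kstat/zfs/arcstats, whose data lines have the form "name type value". arc_mib is a hypothetical helper that converts one field to MiB.

```shell
#!/bin/sh
# Sketch: read ARC limits and the current ARC size in MiB.
# Assumes /proc/spl/kstat/zfs/arcstats with lines "name type value"; the optional
# second argument only exists so the parser can be tried on a saved copy.

# arc_mib FIELD [ARCSTATS_FILE] -> value of FIELD in whole MiB
arc_mib() {
    awk -v f="$1" '$1 == f { printf "%d\n", $3 / 1048576 }' \
        "${2:-/proc/spl/kstat/zfs/arcstats}"
}

# Usage on a live system:
#   for field in c_min c_max size; do echo "$field: $(arc_mib "$field") MiB"; done
# While the built-in default is in use, the zfs_arc_max module parameter reads 0;
# c_max in arcstats shows the limit that is actually in effect.
```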

The sizes can be adapted, either by defining them in /etc/modprobe.d/zfs.conf, or by updating them in the running system with

echo size_in_bytes | sudo tee /sys/module/zfs/parameters/zfs_arc_max

It seems that the lowest value one can set is 64MB (67108864 bytes), so this is what I used in the tests above.
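The 64MB limit can be written both as a modprobe option line and as a runtime setting. This is a sketch; mib_to_bytes and arc_max_conf_line are hypothetical helper names, and 64 MiB = 64 * 1024 * 1024 = 67108864 bytes.

```shell
#!/bin/sh
# Sketch: build the ARC limit for /etc/modprobe.d/zfs.conf and for the running module.
# mib_to_bytes and arc_max_conf_line are hypothetical helpers, not part of OpenZFS.

# mib_to_bytes MIB -> size in bytes
mib_to_bytes() {
    echo $(( $1 * 1024 * 1024 ))
}

# arc_max_conf_line MIB -> modprobe option line limiting the ARC to MIB
arc_max_conf_line() {
    echo "options zfs zfs_arc_max=$(mib_to_bytes "$1")"
}

# Usage (as a user with sudo rights):
#   arc_max_conf_line 64 | sudo tee -a /etc/modprobe.d/zfs.conf   # next module load
#   mib_to_bytes 64 | sudo tee /sys/module/zfs/parameters/zfs_arc_max   # running system
```

Appending with tee -a assumes that /etc/modprobe.d/zfs.conf does not already contain a zfs_arc_max option; if it does, edit that line instead.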

Dropping caches

Reducing the ZFS cache already helps a lot. But to keep the kernel memory from getting fragmented, and the system from running out of memory as a consequence, one can also tell the kernel to drop its caches. On the RISC-V board, a root crontab entry that drops the caches every 10 minutes did the job:

*/10 * * * * /usr/bin/sync; /usr/bin/echo 3 > /proc/sys/vm/drop_caches
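To check how much memory a cache drop actually frees, one can compare MemFree from /proc/meminfo before and after. The sketch below uses a hypothetical helper meminfo_mib (values in /proc/meminfo are in kB). It looks at MemFree rather than MemAvailable, because MemAvailable already counts reclaimable page cache as available.

```shell
#!/bin/sh
# Sketch: read a /proc/meminfo field in whole MiB (meminfo reports kB).
# The optional second argument only exists so the parser can be tried on a saved copy.

# meminfo_mib FIELD [MEMINFO_FILE]
meminfo_mib() {
    awk -v f="$1:" '$1 == f { printf "%d\n", $2 / 1024 }' "${2:-/proc/meminfo}"
}

# Usage in a root shell:
#   before=$(meminfo_mib MemFree)
#   sync; echo 3 > /proc/sys/vm/drop_caches
#   after=$(meminfo_mib MemFree)
#   echo "MemFree grew by $((after - before)) MiB"
```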

I needed this approach to prevent kernel panics before I discovered that one can reduce the ZFS cache. Honestly, it doesn't hurt, except that one might lose some performance. I didn't mind, since this whole exercise was more of an experiment than a serious attempt at a RISC-V based NAS. Still, it is not impossible to use this board as a NAS if you can live with lower transfer speeds than you might normally expect (I get around 12MB/s via Samba from an unencrypted ZFS volume).

How to build OpenZFS on RISC-V

As a basis, I used the boot software and Debian root file system that I documented in my other blog post.

On Debian unstable (at the time of writing, March 2022: bookworm/sid), OpenZFS is not yet available for RISC-V as a Debian package (zfsutils-linux). Hence you have to compile and build OpenZFS yourself.

Unfortunately, OpenZFS does not seem to support cross-compiling for RISC-V (yet), so you have to build the ZFS kernel module natively on the RISC-V board. And since the ZFS kernel module has to be compiled with the same compiler as the kernel (I had cross-compiled the kernel with gcc 9, while Debian bookworm/sid ships gcc 11), I also had to recompile the Linux kernel on this single-core RISC-V processor. This took 4.5 hours, but OK, I really wanted to get ZFS running :-).
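Before spending hours on a kernel rebuild, it can be worth checking whether the two compilers actually differ. The sketch below assumes that /proc/version names the kernel's compiler as a lowercase "gcc" followed by its version, which holds for the common formats but is not guaranteed. kernel_gcc_major and local_gcc_major are hypothetical helpers.

```shell
#!/bin/sh
# Sketch: compare the GCC major version the running kernel was built with
# against the local gcc. Parsing /proc/version this way is an assumption:
# it takes the first number after the last lowercase "gcc" in the string.

# kernel_gcc_major [VERSION_STRING] -> e.g. "9"
kernel_gcc_major() {
    printf '%s\n' "${1:-$(cat /proc/version)}" |
        sed -n 's/.*gcc[^0-9]*\([0-9][0-9]*\)\..*/\1/p'
}

# local_gcc_major -> major version of the gcc in PATH (handles "11" and "11.2.0")
local_gcc_major() {
    gcc -dumpversion | cut -d. -f1
}

# Usage:
#   if [ "$(kernel_gcc_major)" != "$(local_gcc_major)" ]; then
#       echo "kernel: gcc $(kernel_gcc_major), local: gcc $(local_gcc_major)"
#   fi
```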

To build it, follow the OpenZFS build instructions, which first list the required packages:

sudo apt install build-essential autoconf automake libtool gawk alien fakeroot dkms libblkid-dev uuid-dev libudev-dev libssl-dev zlib1g-dev libaio-dev libattr1-dev libelf-dev python3 python3-dev python3-setuptools python3-cffi libffi-dev python3-packaging git libcurl4-openssl-dev

then you clone the repository

git clone https://github.com/openzfs/zfs.git

and execute

cd ./zfs

git checkout master

sh autogen.sh

./configure --with-linux=/your/kernel/directory

make -s -j$(nproc)

Finally, install the kernel module and the user-space tools:

sudo make install; sudo ldconfig; sudo depmod

To test the module, load it:

sudo modprobe zfs

If this succeeds, add a line containing "zfs" to /etc/modules so that the module is loaded at boot. Done!
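Both steps can be scripted so that rerunning them does not add a duplicate line. This is a sketch with hypothetical helper names. It treats the presence of /sys/module/zfs as the sign that the module is loaded.

```shell
#!/bin/sh
# Sketch: verify the module is loaded and register it in /etc/modules only once.
# zfs_loaded and add_module_line are hypothetical helpers; the optional second
# argument of add_module_line only exists so it can be tried on a scratch file.

# zfs_loaded -> success if the zfs module is present in sysfs
zfs_loaded() {
    [ -d /sys/module/zfs ]
}

# add_module_line NAME [MODULES_FILE] -> append NAME unless an identical line exists
add_module_line() {
    grep -qx "$1" "${2:-/etc/modules}" 2>/dev/null || echo "$1" >> "${2:-/etc/modules}"
}

# Usage (as root, after modprobe zfs):
#   zfs_loaded && add_module_line zfs
```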

ZFS auto snapshot

Since the Debian package zfs-auto-snapshot is not yet available on RISC-V, probably due to its dependency on zfsutils-linux, I downloaded it from zfsonlinux/zfs-auto-snapshot and followed the instructions in the README:

wget https://github.com/zfsonlinux/zfs-auto-snapshot/archive/upstream/1.2.4.tar.gz

tar -xzf 1.2.4.tar.gz

cd zfs-auto-snapshot-upstream-1.2.4

sudo make install

This installs cron jobs that take snapshots every 15 minutes (keeping 4), hourly (keeping 24), daily (31), weekly (8) and monthly (12).
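To verify that the rotation works, one can count the automatic snapshots per dataset and label. The sketch below assumes zfs-auto-snapshot's default naming scheme, dataset@zfs-auto-snap_<label>-<date>, for example tank/data@zfs-auto-snap_hourly-2022-03-13-1017, where tank/data is a hypothetical dataset. It also assumes dataset names without spaces. count_auto_snaps is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: count zfs-auto-snapshot snapshots per dataset and label.
# Reads one snapshot name per line on stdin; names not following the assumed
# "dataset@zfs-auto-snap_<label>-<date>" scheme (e.g. manual snapshots) are ignored.

# count_auto_snaps -> lines of "dataset label count"
count_auto_snaps() {
    sed -n 's/^\(.*\)@zfs-auto-snap_\([a-z]*\)-.*/\1 \2/p' | sort | uniq -c |
        awk '{ print $2, $3, $1 }'
}

# Usage:
#   zfs list -H -t snapshot -o name | count_auto_snaps
```

Once enough time has passed, the counts should level off at the retention listed above: 4 frequent, 24 hourly, 31 daily, 8 weekly and 12 monthly snapshots per dataset.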